One day, I bought two packs of water bottles. The packs had a special offer sign on them!

They also had a barcode that leads to a web link for entering a contest. The prize was 100 AED to spend in a coffee shop while watching a World Cup match, plus some saving vouchers of up to 500 AED.

So, I said: why not! Let’s give it a try! I am a lucky person 🙂

I scanned the barcode, then I navigated to the web page. They requested the following info:

- My name and contact info: email and mobile number

- An image of the water bottle packs

- An image of the receipt as well

They needed the images to verify that I bought their product within the competition’s time range, so I took the images on my phone and submitted the data successfully!

I got a message that my data has been received and I have to wait until they verify the information, then I will be considered as a participant in the contest.

Luckily! I got a phone call later and I won the prize!

I was curious! How did the validation process work? 🤔

Was it manual?

Or did they use some AI technology to verify the images?

If it’s manual, what if thousands of people decided to submit the images to participate?

Did they consider the scale challenge in terms of storing the images and responding to people in a reasonable period of time?

So, I decided to build a cloud solution on Azure to verify the images submitted by end users.

This is an Azure Cloud Scenario for a marketing campaign with some AI in it. You can check more Cloud Scenarios here.

I want to make sure that this cloud solution will increase business agility, shorten time to market, and help the marketing team hit the target of the marketing campaign.

To implement this scenario, I have created some business requirements based on the story above:

Business Requirements

A water company, “Super Water”, is planning to run a marketing campaign with a special prize to attract more customers. Each customer who buys one or more water bottles can enter a contest.

The customer should submit:

- a photo of the bought product

- a photo of the receipt

- contact information: email + phone number

The system will verify the photos:

- The first photo should include a water bottle or a pack of water bottles, with the logo of the company

- The second photo for the receipt should include the transaction date which is within the campaign period range

- The second photo should also contain at least one product item that is one of the company’s products.

If both photos pass the validation process, the customer is a valid participant in the contest to win the prize.

The expected number of users might be 1,000,000 during the contest. The contest period is 1 month. Our marketing team is planning to target people in the UAE during the contest. We still have 2 months to launch the campaign, and everything should be ready at least one week before that for testing and validation.

We need to build an application that satisfies the business requirements and make sure it’s reliable, scalable, secure, and cost optimized. The system is expected to work properly during the marketing campaign without failures. High traffic is expected!

The following parts of the post will discuss the solution architecture from different aspects. After that, I will show you how to implement the solution on Azure.

Let’s discuss the main components/services to design a solution for this Azure Cloud Scenario!

Main Components

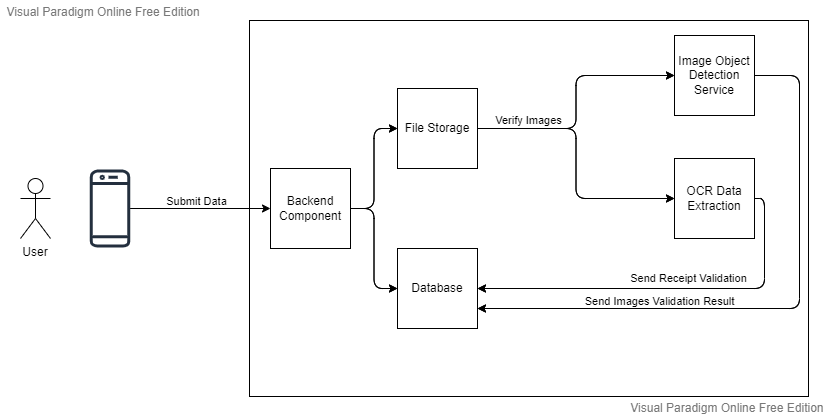

The main components are:

- Web page / Web App to enable the end users to submit the photos and the contact information.

- Backend endpoint to receive the files, the contact information, and store them in a storage.

- File Storage to store the photos.

- Machine learning service to detect the company’s product and company’s logo within a photo.

- Machine learning service to extract the data from a receipt photo.

- Database to store the customers’ contact information with the validation result of their photos.

- Event-driven architecture service that manages the internal events between the other components to guarantee reliability

Let’s draw the initial diagram and discuss different scenarios and choices.

Let’s now discuss each component in more detail:

Web Page

This is the frontend part of the solution. The page will have to support one of three scenarios:

1- Web Page submits to Backend Server

A server-rendered form page or a single-page application that supports:

- Validating the user inputs

- Submitting the contact information

- Uploading two images

Then, the server (backend component) will validate again and send the data to persistent storage like file storage, a database, etc.

This is the more secure way, because we don’t expose the blob storage and we validate the user data before moving to the AI verification step.

One of the challenges here is that all the uploaded images have to pass through the backend first before we store them in the blob storage, which is extra processing.

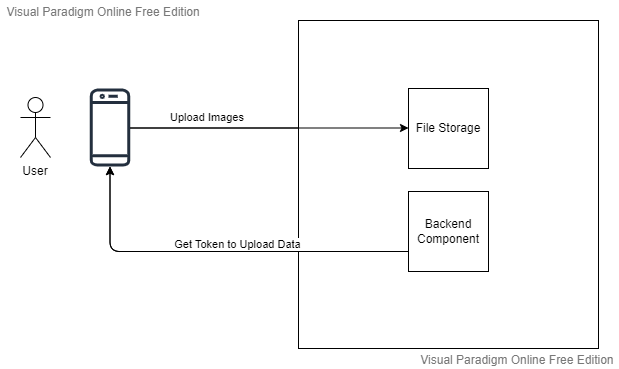

2- Web Page submits to Blob Storage

A server-rendered form page or a single-page application that can:

- Do the regular client side validation

- Submit the contact information

- Upload the images directly to the blob storage

In this case, the client gets a token from a backend component to gain temporary access to the blob storage, so it can upload directly.

This is the more performance-efficient way, because the data is uploaded directly to the storage and no web proxy server is needed to pass the data along.

But!! This requires checking the uploaded images to make sure they are not malicious. It may also give the client the ability to upload huge files and harm the whole system.
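Whichever upload option we pick, some component has to reject oversized or non-image files. Here is a minimal sketch of such a check. The size limit and the function names are my own assumptions for illustration, and a signature check alone does not prove a file is safe; it only filters out the obvious cases.

```python
from typing import List, Optional

# Hypothetical limit for illustration; tune it to the real campaign needs.
MAX_UPLOAD_BYTES = 5 * 1024 * 1024  # 5 MiB

# Leading signature bytes of the two formats a phone camera usually produces.
JPEG_MAGIC = b"\xff\xd8\xff"
PNG_MAGIC = b"\x89PNG\r\n\x1a\n"


def sniff_image_type(data: bytes) -> Optional[str]:
    """Return 'jpeg' or 'png' based on the file signature, else None."""
    if data.startswith(JPEG_MAGIC):
        return "jpeg"
    if data.startswith(PNG_MAGIC):
        return "png"
    return None


def validate_upload(data: bytes, max_bytes: int = MAX_UPLOAD_BYTES) -> List[str]:
    """Return a list of problems; an empty list means the upload looks acceptable."""
    problems = []
    if len(data) == 0:
        problems.append("file is empty")
    if len(data) > max_bytes:
        problems.append(f"file is larger than {max_bytes} bytes")
    if sniff_image_type(data) is None:
        problems.append("file is not a JPEG or PNG image")
    return problems
```

With option 1 this check would run in the backend before storing the blob. With option 2 it would have to run after the upload, for example in the function that reacts to the new blob.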

3- Web Page local AI verification

This is not a completely separate option. We can use it along with option 1 or 2 above.

We can take the trained AI model and embed it inside the web page, so we can verify the images locally before uploading them to the server. We may even choose to skip uploading them, unless we want to repeat the verification on the server side.

I will consider the first option, with or without local AI verification.

Backend

There are plenty of choices to think of in terms of the backend.

Should we use Azure Virtual Machines with a Web Server installed to receive the traffic?

Should we use Azure App Service?

Or maybe we can use Azure Functions?

Why not use Kubernetes?

One of the aspects we need to consider is the level of control and management/operations I want to handle as a customer versus the level I want to offload to the cloud provider.

- When I go with an IaaS (Infrastructure as a Service) choice like Azure Virtual Machines, I get the essential compute, storage, network, and security services, but I have to manage the infrastructure resources and the software licenses myself.

- When I go with a PaaS (Platform as a Service) choice like Azure App Service, the infrastructure resources are managed by the cloud provider. Cloud features like scalability and availability are built in, so the developers focus more on developing the application on top of the PaaS services.

- When I go with a Serverless choice like Azure Functions, I get the infrastructure and the cloud features, but it’s all invisible to me and completely managed by the cloud provider. I just write the code.

I will mainly depend on PaaS and Serverless choices in this scenario, because the “Super Water” company doesn’t have the capability or the staff to manage infrastructure. We also need a faster time to market, which means reducing operations and increasing the agility of the development team, and the marketing campaign starts in just two months.

I will consider Azure Functions (Serverless functions) and PaaS services to write the business logic code.

File Storage

Azure Blob Storage is the PaaS service from Microsoft for storing unstructured data such as text and binary data. In our scenario, we have one million potential participants with two images each, so I assume we have two million images to be stored and verified.

Azure Blob Storage is optimized for storing massive amounts of data.

Oh wait! We still need another storage for the contact information, and the usual way is to use a database. What if there is a way to store the contact information as user-defined data along with the images?

Luckily! Azure Blob Storage has an amazing feature called “Index Tags”. It lets us attach key/value pairs to a blob so we can easily query and organize our files. But before we commit to using it, I have to check whether it is generally available, because it’s a relatively new feature.

I can check the feature availability status using this link, and it looks available, so we can use it.

For each uploaded image, we can add user defined data:

- name

- phone number

- is-verified
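To get a feel for what these tags could look like, here is a small sketch that builds the tag set and a filter expression for querying verified blobs. The key names, the helper names, and the container name are hypothetical. The limits (10 tags per blob, key and value lengths, allowed characters) are as I understand them from the blob index tags documentation, so double-check them before relying on this.

```python
import re
from typing import Dict

# Limits as I understand them from the blob index tags documentation.
MAX_TAGS_PER_BLOB = 10
MAX_KEY_LENGTH = 128
MAX_VALUE_LENGTH = 256
# Letters, digits, space, plus, minus, period, slash, colon, equals, underscore.
ALLOWED_CHARS = re.compile(r"[A-Za-z0-9 +\-./:=_]*")


def validate_tags(tags: Dict[str, str]) -> None:
    """Raise ValueError if the tag set would likely be rejected by the service."""
    if len(tags) > MAX_TAGS_PER_BLOB:
        raise ValueError("too many tags for one blob")
    for key, value in tags.items():
        if not 1 <= len(key) <= MAX_KEY_LENGTH:
            raise ValueError(f"bad key length: {key!r}")
        if len(value) > MAX_VALUE_LENGTH:
            raise ValueError(f"value too long for key {key!r}")
        if not ALLOWED_CHARS.fullmatch(key) or not ALLOWED_CHARS.fullmatch(value):
            raise ValueError(f"unsupported character in tag {key!r}")


def build_index_tags(name: str, phone: str, is_verified: bool) -> Dict[str, str]:
    """Build the three tags from the list above (key names are hypothetical)."""
    tags = {
        "name": name,
        "phone-number": phone,
        "is-verified": "true" if is_verified else "false",
    }
    validate_tags(tags)
    return tags


def verified_filter(container: str) -> str:
    """Build a tag filter expression selecting verified blobs in one container."""
    return f"@container = '{container}' AND \"is-verified\" = 'true'"
```

One thing this sketch makes visible: a name containing characters outside the allowed set (an apostrophe, or Arabic letters) would not fit in a tag value as is, which is another reason to think twice before storing contact information this way.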

Wait again!!! It may not be the right solution yet! We need to make sure that we can control access to the index tag data. I will discuss this more later in this blog post.

I will consider Azure Blob Storage to store the submitted images.

Object Detection in Images

Object detection in images is one of the AI computer vision tasks. In this service, the machine learning model is trained to classify objects within an image. It also determines a bounding box that marks each object.

It’s a more advanced technique than image classification, where the model only classifies the image as a whole.

So, instead of describing what the image is, object detection describes what objects are in the image and determines their locations.

Custom Vision Service in Microsoft Azure is the cloud-based solution we need to create a custom object detection model for two objects, to verify that the image has the right product:

- The water bottle

- The company’s logo

Custom Vision service will do that in two steps:

- Train our custom model to recognize different classes using existing images.

- Publish it as a service that can be consumed by our application.

I will consider Custom Vision Service to detect the product object and the logo in the submitted images.
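Once the model is published, the prediction response contains a list of predictions, each with a `tagName`, a `probability`, and a bounding box. Here is a minimal sketch of how the product photo check could be decided from that list. The tag names and the probability threshold are assumptions; they depend on how the model is actually trained.

```python
from typing import Dict, Iterable, Sequence

# Hypothetical tag names and threshold; they depend on the trained model.
REQUIRED_TAGS = ("water-bottle", "company-logo")
MIN_PROBABILITY = 0.6


def best_probabilities(predictions: Iterable[dict]) -> Dict[str, float]:
    """Keep the highest probability seen for each tag."""
    best: Dict[str, float] = {}
    for prediction in predictions:
        tag = prediction["tagName"]
        best[tag] = max(best.get(tag, 0.0), prediction["probability"])
    return best


def product_photo_is_valid(
    predictions: Iterable[dict],
    required_tags: Sequence[str] = REQUIRED_TAGS,
    min_probability: float = MIN_PROBABILITY,
) -> bool:
    """The photo passes only if every required object is detected confidently."""
    best = best_probabilities(predictions)
    return all(best.get(tag, 0.0) >= min_probability for tag in required_tags)
```

The threshold is a tradeoff: a higher value rejects more blurry but genuine photos, while a lower one lets more false detections through.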

Receipt Data Extraction

Form Recognizer Service in Microsoft Azure is the cloud-based solution we need to extract the receipt’s text data from the image uploaded by the customer.

We need to check:

- The transaction date of the receipt.

- Whether one of the receipt’s items is one of the company’s products.

I will consider Form Recognizer Service to extract the transaction date and the items of the submitted receipt.
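After the receipt fields are extracted, the two checks are plain business logic. Here is a minimal sketch, assuming the extraction result has already been mapped into a small `Receipt` object. The campaign dates, the product keyword, and all the names here are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional, Sequence

# Hypothetical one-month campaign window and product keywords.
CAMPAIGN_START = date(2023, 1, 1)
CAMPAIGN_END = date(2023, 1, 31)
PRODUCT_KEYWORDS = ("super water",)


@dataclass
class Receipt:
    """Fields we care about, mapped from the receipt extraction result."""
    transaction_date: Optional[date]
    item_names: List[str] = field(default_factory=list)


def receipt_is_valid(
    receipt: Receipt,
    start: date = CAMPAIGN_START,
    end: date = CAMPAIGN_END,
    keywords: Sequence[str] = PRODUCT_KEYWORDS,
) -> bool:
    """Valid when the date is inside the campaign and an item matches a product."""
    if receipt.transaction_date is None:
        return False  # the date could not be read from the photo
    in_period = start <= receipt.transaction_date <= end
    has_product = any(
        keyword.lower() in item.lower()
        for item in receipt.item_names
        for keyword in keywords
    )
    return in_period and has_product
```

Matching by keyword is deliberately loose, because shops print product names in many different ways on receipts.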

Database to store Customers’ Data

I think the data part of this solution is relatively simple. We have three main steps: store the data, verify the images using the AI models, then store the verification result.

We don’t have to build an advanced data pipeline, data warehousing, or reporting for now, and the data types can fit in relational or non-relational databases initially.

The AI verification result comes in JSON format and we need to store it, so a non-relational database is the more natural fit.

I will not spend a lot of effort on this part because, most probably, the data will be stored in index tags alone within the storage account.

I will consider using a non-relational database or the Index Tags feature in the Azure Storage account.

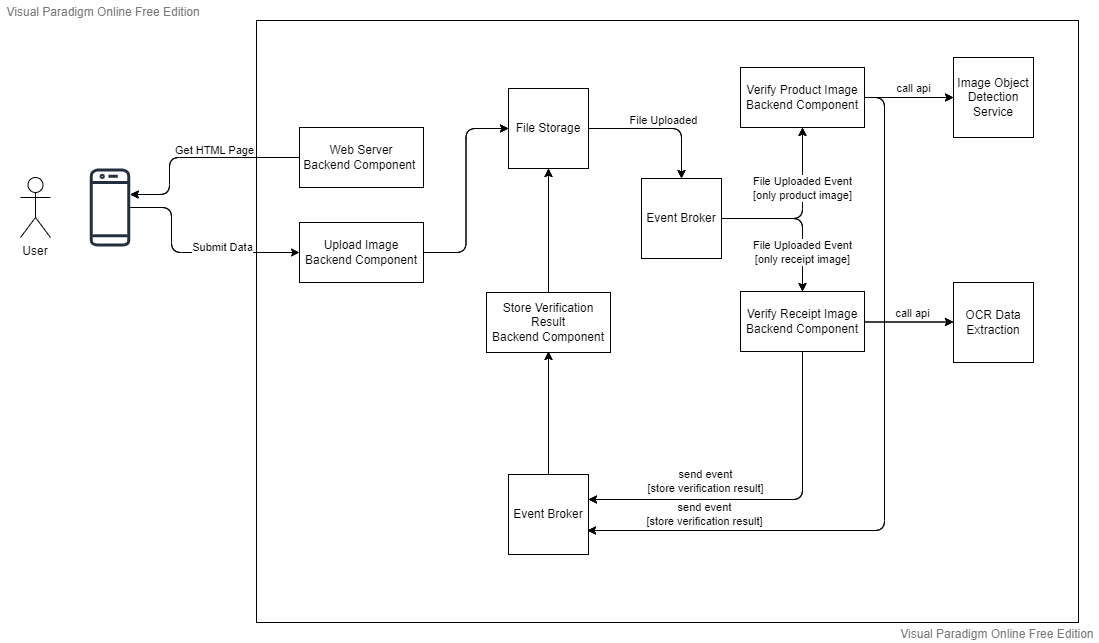

Event-Driven Service

As I discussed in the frontend part, the upload HTTP request is routed to the backend component, which stores the images in the blob storage. Then we need to call the AI APIs, passing the image links to verify them, and store the verification result back in the blob storage.

That’s three main steps.

Should the end user wait until the verification step finishes?

Should the HTTP response wait until all three steps are finished?

Why don’t we just upload the images and return a success message to the end user, explaining that we got the data and will call soon?

What if the upload step succeeds but the AI API step fails? Should the whole request fail and start over? Or should we just retry the failed step internally?
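To illustrate the "retry only the failed step" idea, here is a minimal sketch of a retry helper with exponential backoff. It is a generic pattern, not something specific to any Azure service, and the names and defaults are my own assumptions.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry(
    step: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run one step, retrying it alone with exponential backoff on failure."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return step()
        except Exception:
            if attempt == attempts:
                raise  # give up and let the caller handle the failure
            # Wait 1x, 2x, 4x... the base delay before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```

The upload step is not repeated here: only the step wrapped in `retry` runs again, which is the behavior we want when the AI call fails after the images are already stored.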

To design a more resilient and reliable solution, we can use an event-driven service that supports:

- Event delivery

- Decouple the system components

- Enable the components to communicate with messages

- Filter event delivery so that events only reach the destinations that are interested in them

- Send the same event to multiple receivers if needed

- Integrate with different Azure services as a source of events, like the storage account

Event Grid is a highly scalable, Serverless event broker that has the features above and more.

I will consider using Event Grid Service to Apply Event-Driven Architecture
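When a blob is created, the storage account can publish a `Microsoft.Storage.BlobCreated` event whose `subject` contains the container and blob names. Here is a minimal sketch of how a handler could route such an event to the right verification step. The container names and handler names are hypothetical, and in practice most of this filtering can be configured on the Event Grid subscription itself.

```python
from typing import Optional, Tuple

BLOB_CREATED = "Microsoft.Storage.BlobCreated"
SUBJECT_PREFIX = "/blobServices/default/containers/"

# Hypothetical container names mapped to hypothetical handler names.
HANDLERS = {
    "product-photos": "verify_product_photo",
    "receipts": "verify_receipt",
}


def parse_blob_subject(subject: str) -> Optional[Tuple[str, str]]:
    """Split an event subject into (container, blob name), or return None."""
    if not subject.startswith(SUBJECT_PREFIX):
        return None
    rest = subject[len(SUBJECT_PREFIX):]
    container, separator, blob_name = rest.partition("/blobs/")
    if not separator or not container or not blob_name:
        return None
    return container, blob_name


def route_event(event: dict) -> Optional[str]:
    """Return the name of the handler that should process this event, if any."""
    if event.get("eventType") != BLOB_CREATED:
        return None
    parsed = parse_blob_subject(event.get("subject", ""))
    if parsed is None:
        return None
    container, _blob_name = parsed
    return HANDLERS.get(container)
```

Keeping the two photo types in separate containers makes the routing trivial, and it also lets each verification function scale and fail independently.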

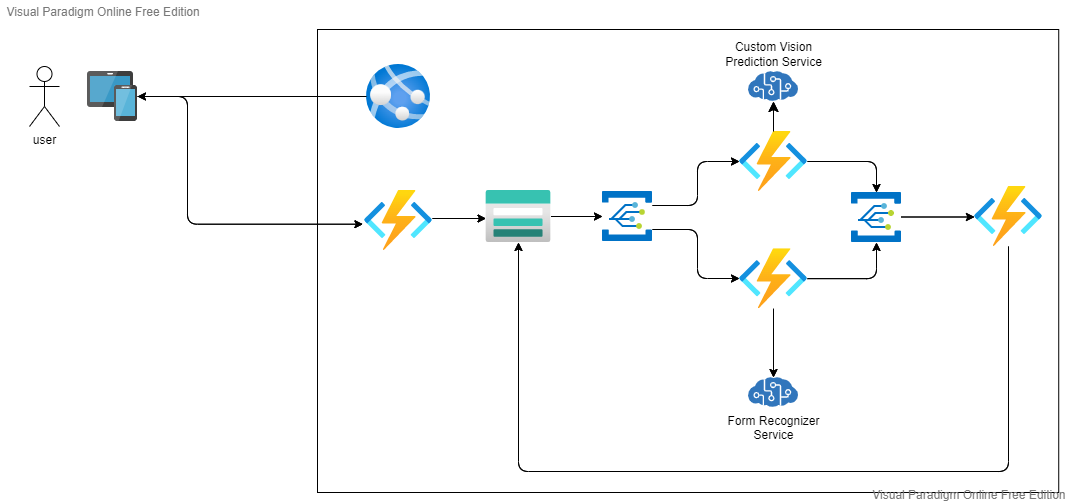

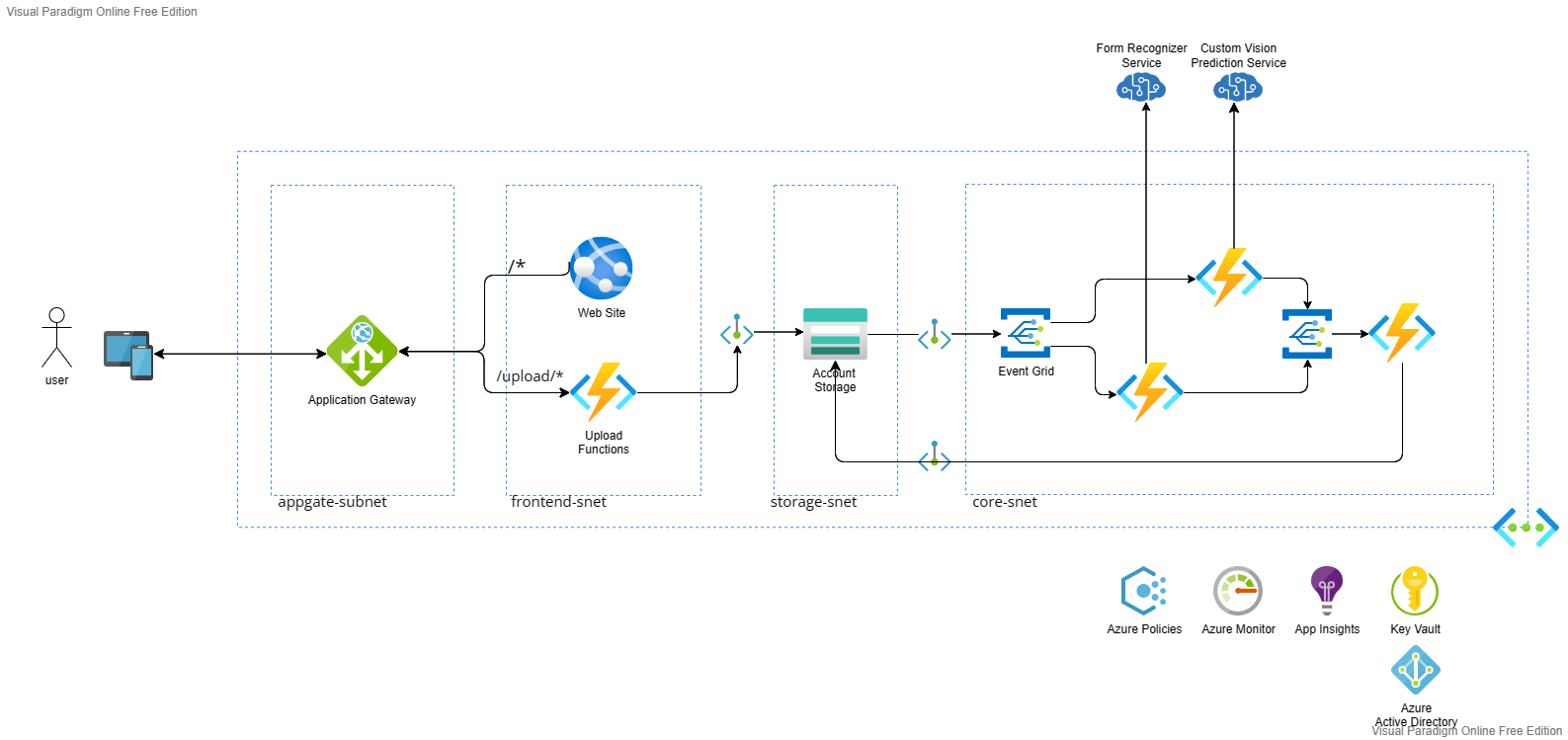

Let’s see what the diagram would look like after applying event-driven architecture and adding the event-driven service.

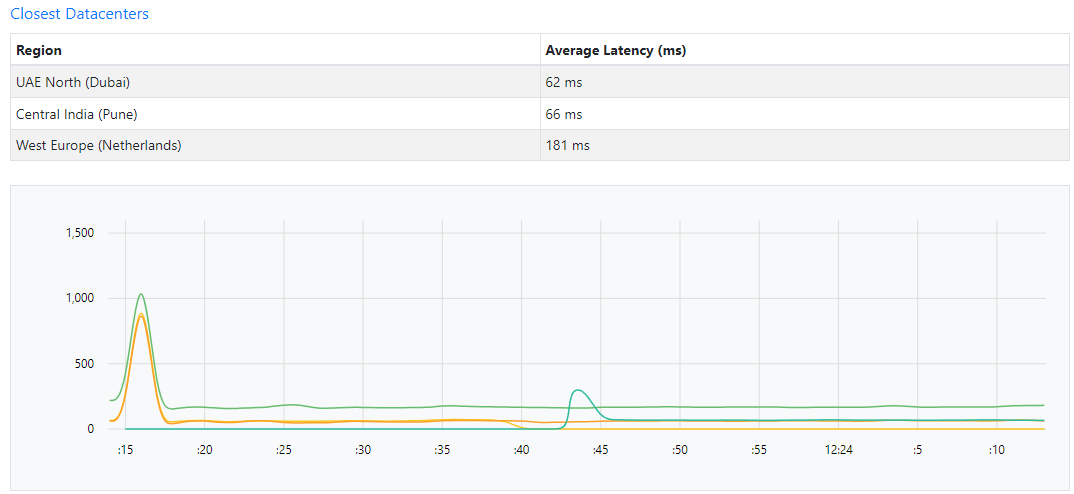

Azure Regions Selection

Selecting the region is essential for many aspects:

- Latency

- Service Availability

- Compliance and data residency

- Pricing

Latency

Our customer base is in the UAE, so we need to consider the Azure UAE regions to minimize latency. We can measure the latency using https://www.azurespeed.com/Azure/Latency

I checked the latency between many regions and my IP location in Abu Dhabi. Obviously, I will choose a UAE region as the main region.

Service Availability

Before we commit to use any specific cloud service, we need to check if it’s available in the regions we are planning to use. We can see the service availability by region on this link

https://azure.microsoft.com/en-us/explore/global-infrastructure/products-by-region.

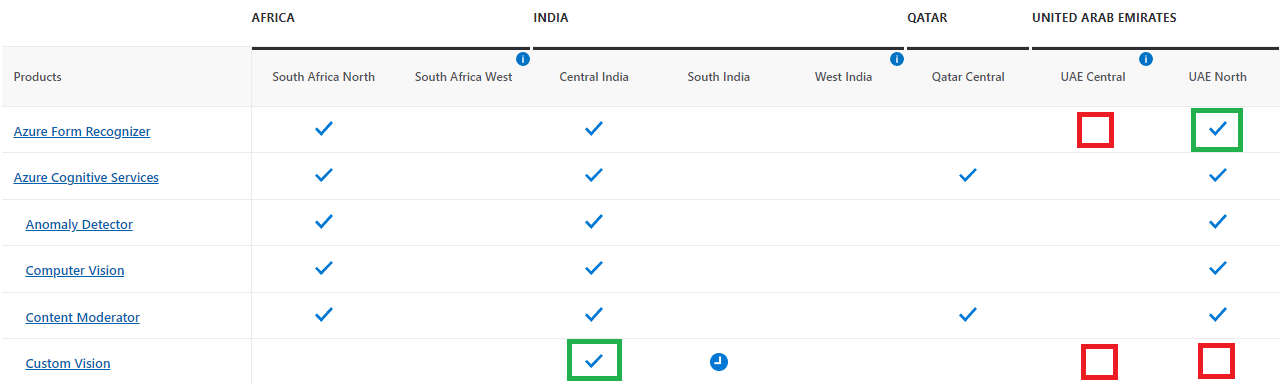

I selected only the Azure regions in the UAE along with some nearby regions and, as the image shows, Azure Custom Vision was not available in the UAE regions when I wrote this post!

Luckily enough, it’s available in the Central India region, which has good latency based on the earlier latency check.

So, for Custom Vision, I will use the nearest region to the UAE that offers it.

After checking the availability of the service, I found that Central India is almost the nearest. The rest of the services are all available in the UAE.

Compliance and data residency

There are important points that should be considered:

- The data will reside mainly in UAE North, but the product images will be processed in the Central India region because the Custom Vision service is not yet available in the UAE regions.

- Storing the contact information should be mentioned in the privacy policy and terms of use.

The points above should be discussed with the legal team to update the privacy policy if needed and to check whether it’s acceptable to use any cloud service that resides outside the UAE.

To recap, here is a table of the Azure Regions we will use:

| Service | UAE North | Central India |
| --- | --- | --- |
| Custom Vision | Not Available | Yes |
| Form Recognizer | Yes | |
| Storage Account | Yes | |
| Azure Functions | Yes | |
| Event Grid | Yes | |
| App Service | Yes | |

Congrats! We have almost finished the initial research about the Azure Cloud Services we need. Here is the initial solution architecture diagram:

Yes, I said initial solution diagram, because it is! The next section will help us improve the design even more.

Azure Well-Architected Framework Pillars

Azure Well-Architected Framework is a set of principles and best practices grouped together into five pillars. It will guide you to build and maintain a well architected cloud solution. So, I follow this framework for building my solution.

The five pillars are:

- Reliability

- Security

- Cost Optimization

- Operational Excellence

- Performance Efficiency

Reliability

Reliability can be implemented differently based on the services that compose our solution. In this example, the solution is composed of many PaaS and Serverless services, so most of the reliability work is offloaded to the cloud provider. We just need to check the SLA of each service and see if it fits our requirements.

| Services | SLA |
| --- | --- |
| Custom Vision | 99.9% SLA link |
| Form Recognizer | 99.9% SLA link. Note: I didn’t find a specific SLA for Form Recognizer, but it’s one of Azure Applied AI Services. |
| Storage Account | 99.9% for LRS (locally redundant storage) SLA link |
| Azure Functions (Serverless) | 99.95% for consumption plan SLA link |
| Event Grid (Serverless) | 99.99% SLA link |
| App Service | 99.95% SLA link |

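So, taking the lowest SLA in the table as a rough figure, the overall availability of the system is about 99.9% per year, which is 8.76 hours/year of potential failure. Our marketing campaign is one month, so let’s say we expect about 43.8 minutes of potential failure during the campaign. Strictly speaking, when services depend on each other in series, the composite SLA is the product of the individual SLAs, so the real figure is somewhat lower.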
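To make the arithmetic explicit, here is a small sketch that computes the downtime for the lowest single SLA and for a composite SLA. The composite figure assumes every service is a serial dependency, which is a simplification, because the verification steps in this design run asynchronously.

```python
from typing import Iterable

# SLA values taken from the table above.
SLAS = {
    "Custom Vision": 0.999,
    "Form Recognizer": 0.999,
    "Storage (LRS)": 0.999,
    "Azure Functions": 0.9995,
    "Event Grid": 0.9999,
    "App Service": 0.9995,
}

HOURS_PER_YEAR = 365 * 24
HOURS_PER_MONTH = HOURS_PER_YEAR / 12


def composite_availability(slas: Iterable[float]) -> float:
    """Multiply the SLAs, treating every service as a serial dependency."""
    result = 1.0
    for sla in slas:
        result *= sla
    return result


def downtime_hours_per_year(availability: float) -> float:
    return (1 - availability) * HOURS_PER_YEAR


def downtime_minutes_per_month(availability: float) -> float:
    return (1 - availability) * HOURS_PER_MONTH * 60


lowest = min(SLAS.values())
composite = composite_availability(SLAS.values())
print(f"lowest SLA:    {lowest:.4%}, {downtime_minutes_per_month(lowest):.1f} min/month")
print(f"composite SLA: {composite:.4%}, {downtime_minutes_per_month(composite):.1f} min/month")
```

The composite number is noticeably lower than any single SLA, which is worth keeping in mind when promising availability to the business.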

I think this suits what we need in such a scenario!

Security

Security has five main sections:

Governance and Compliance

By using some of the built-in Azure policies, I will make sure that the solution is compliant with the security standards followed in the company. Here are some of the policies that will be enabled:

| Built-in Azure Policy | Description |
| --- | --- |
| App Service apps should have remote debugging turned off | Remote debugging is for the development environment only, and it requires some open ports, so it should be turned off in production. |
| App Service app slots should have resource logs enabled | Logs should be enabled for tracing and investigations. |
| App Service app slots should only be accessible over HTTPS | Only HTTPS is allowed in production to protect data in transit. |
| Cognitive Services accounts should disable public network access | Custom Vision and Form Recognizer shouldn’t be exposed to public access. |
| Azure Event Grid domains should disable public network access | Event Grid shouldn’t be exposed to public access. |

Other policies can be added and we can create custom policies as well.

Identity and Access Management

- Using Azure Active Directory and Identity Access Management

Information Protection and Storage

- Data Protection at rest is provided by default

- Using Key Vault Service to store the keys and the secrets of the AI APIs

- Using SAS (Shared Access Signature) with read only access, when passing the image to AI APIs.

- Controlling access to the “Index Tags” feature in blob storage. We are storing contact information there, so it should be protected, and we should make sure that only those who have access can query the files and read the index tag data.

Network Security and Containment

- Using Virtual Network and subnets to segregate the workloads and control the access using NSGs (Network Security Groups)

- Avoid exposing the frontend layer directly to the public internet and use Application Gateway instead

- Enable WAF (Web Application Firewall) as part of the Application Gateway to protect the app from common web attacks

- DDoS Protection Basic is enabled by default.

Security Operations

- Using Azure Advisor

- Doing the Azure Well-Architected Framework Assessment

Cost Optimization

Estimate Using Azure Calculator Tool

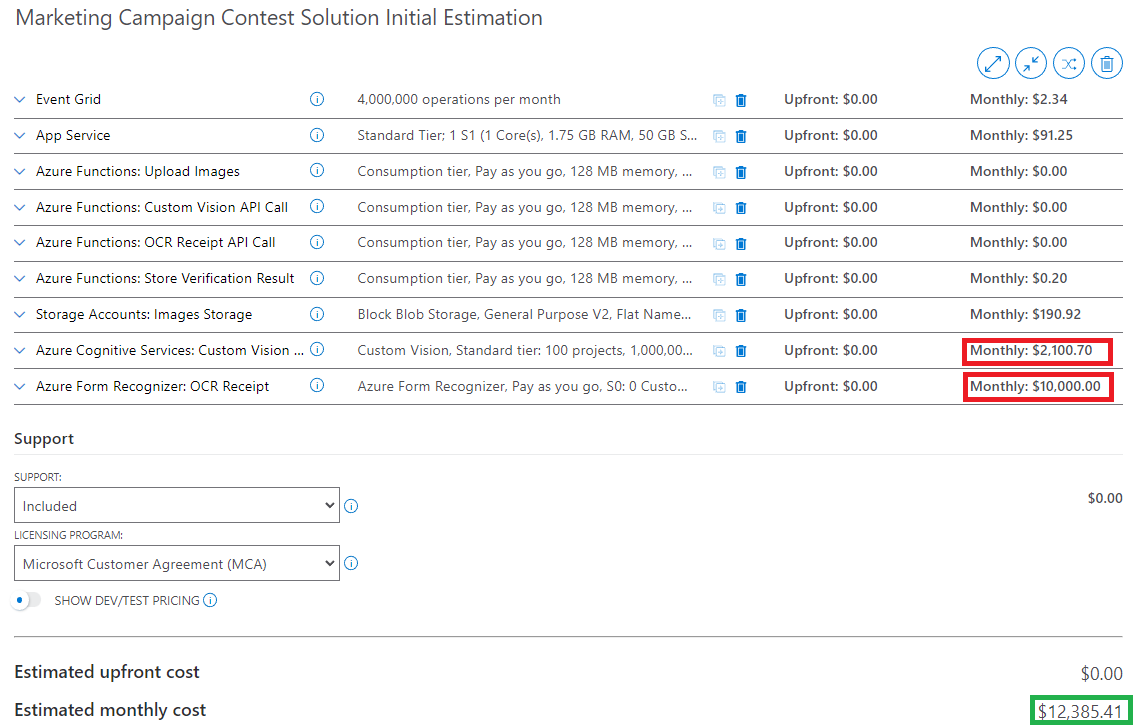

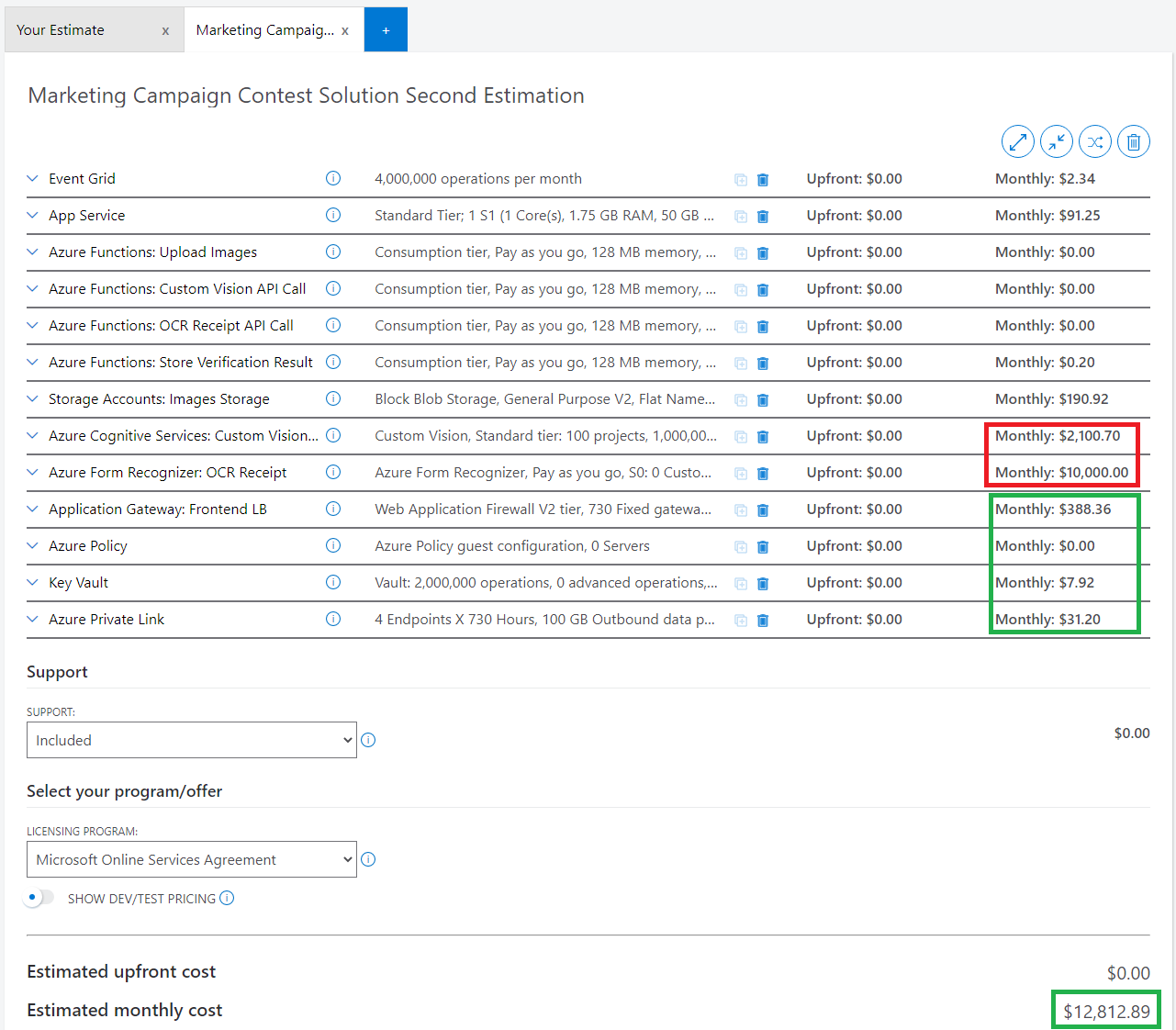

This is the initial estimate that contains the main workloads. You can view the details on this link.

I assumed that the marketing campaign targets one million users, and based on that I calculated the number of potential files, requests, and computation in general:

- The first 1,000,000 requests on Azure Functions are free of charge, which is why all the Azure Functions show zero cost in the estimate. However, we have 4 functions, and as far as I know the free grant applies per subscription rather than per function, so the combined executions could exceed it.

- The Custom Vision API will cost $2,100.70 for a potential one million images to be predicted. We could reduce this cost if we decide to run our trained AI model on the client side. On this link, I explained how to export the model to TensorFlow.js.

- The Form Recognizer service will cost $10,000.00 for a potential one million receipts to be read! We should discuss that with the business and provide an alternative in case it’s over the budget. On this link, I mentioned one of the open-source OCR libraries that might be enough for our scenario. We should also estimate the compute power it will need before we decide.
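To discuss these numbers with the business, it helps to see how the AI cost moves with the number of participants. Here is a rough sketch that derives a per-participant cost from the two figures above and scales it linearly. Linear scaling is my own simplifying assumption; real pricing may have tiers or free quotas, so treat the calculator as the source of truth.

```python
# Figures from the estimate above, both for one million participants.
BASELINE_PARTICIPANTS = 1_000_000
CUSTOM_VISION_TOTAL = 2100.70      # USD, one product photo per participant
FORM_RECOGNIZER_TOTAL = 10_000.00  # USD, one receipt per participant


def ai_cost_per_participant() -> float:
    """Cost of verifying one product photo plus one receipt."""
    per_image = CUSTOM_VISION_TOTAL / BASELINE_PARTICIPANTS
    per_receipt = FORM_RECOGNIZER_TOTAL / BASELINE_PARTICIPANTS
    return per_image + per_receipt


def estimate_ai_cost(participants: int) -> float:
    """Linear estimate of the AI verification cost (an assumption, see above)."""
    return round(participants * ai_cost_per_participant(), 2)


for participants in (100_000, 250_000, 1_000_000):
    print(f"{participants:>9,} participants: ${estimate_ai_cost(participants):,.2f}")
```

At roughly 1.2 cents per participant, most of the AI cost comes from reading the receipts, so that is the first place to look for savings.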

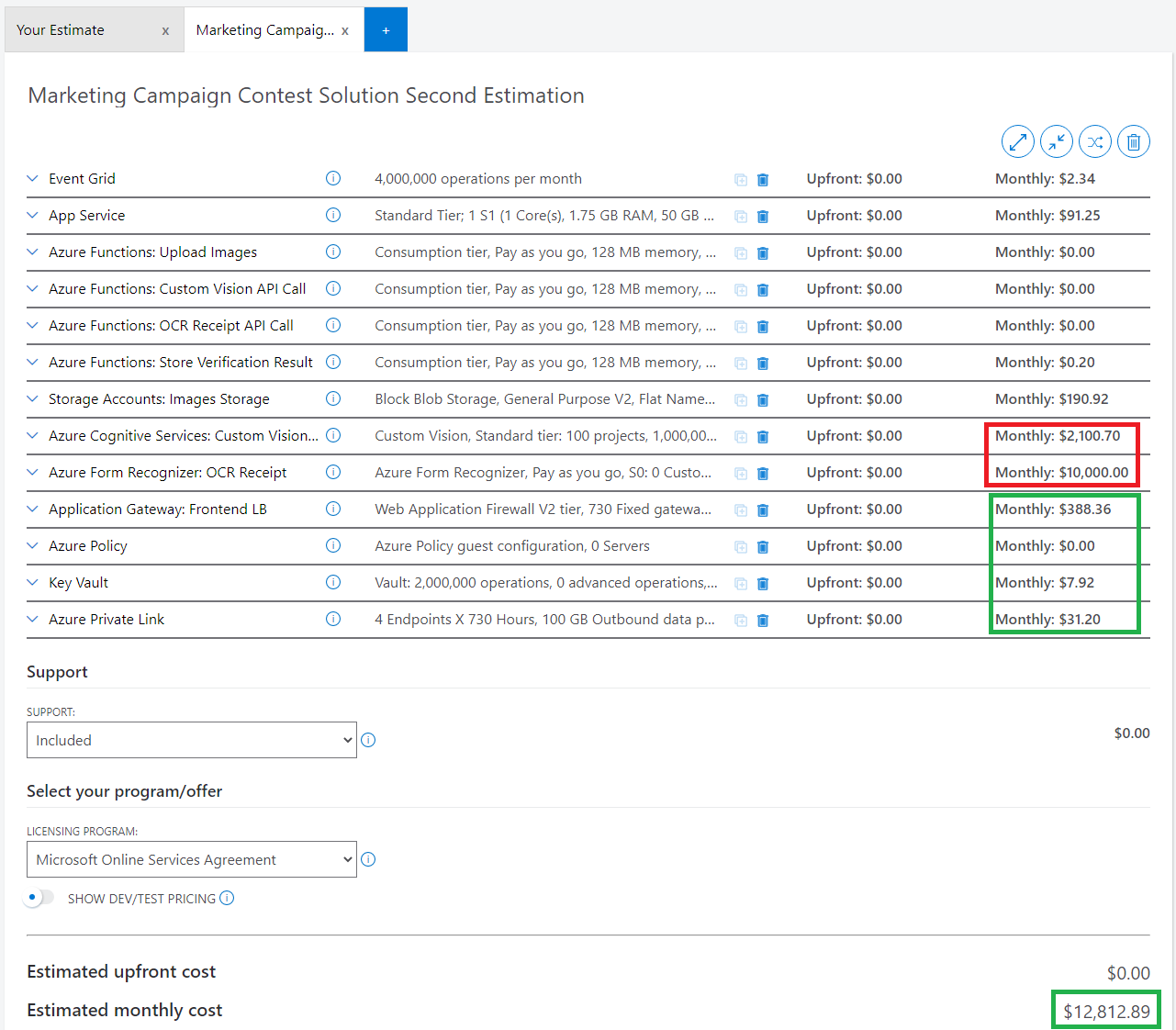

The estimate doesn’t include all the workloads that we discussed in the Security section, so let’s do a second estimate after adding the following resources:

- Application Gateway

- Azure Policies

- Key Vault

- Virtual Network

- Private Endpoints

The new estimate doesn’t differ that much from the first one:

Define Budget and Alert

After discussing the estimate with the business, we can create a budget and alerts in the Azure portal to monitor consumption.

Operational Excellence

I will mention just one side of operations: CI/CD pipelines and project management. Azure DevOps can be used to host the code repos, the CI/CD pipelines, and the project dashboard.

Performance Efficiency

The solution design takes performance into consideration, for example:

- I used stateless instances without affinity, and Azure Functions can scale out based on demand

- The autoscale feature is enabled for the App Service based on CPU usage

- Long-running processing is offloaded to other Azure Functions, so the HTTP request only takes as long as uploading the images

- Event Grid decouples the components, so the verification steps run asynchronously

Conclusions

Service Availability in Regions

| Service | UAE North | Central India |
| --- | --- | --- |
| Custom Vision | Not Available | Yes |
| Form Recognizer | Yes | |
| Storage Account | Yes | |
| Azure Functions | Yes | |
| Event Grid | Yes | |
| App Service | Yes | |
| Application Gateway | Yes | |
| Key Vault | Yes | |
| Application Insights | Yes | |

Azure Services SLA

| Services | SLA |
| --- | --- |
| Custom Vision | 99.9% SLA link |
| Form Recognizer | 99.9% SLA link. Note: I didn’t find a specific SLA for Form Recognizer, but it’s one of Azure Applied AI Services. |
| Storage Account | 99.9% for LRS (locally redundant storage) SLA link |
| Azure Functions (Serverless) | 99.95% for consumption plan SLA link |
| Event Grid (Serverless) | 99.99% SLA link |
| App Service | 99.95% SLA link |
| Application Gateway | 99.95% SLA link |
| Key Vault | 99.99% SLA link |

Azure Calculator Cost Estimation

The total monthly cost is $12,812.89. As you can see, the AI services account for most of it.

We can reduce the cost if we take the following actions:

- Use the Custom Vision model on the frontend as part of the client-side validation. We can export the Custom Vision model; this link has the details: https://feras.blog/using-custom-vision-service-to-detect-objects-in-images

- Move from Azure Form Recognizer to another service that can run on an Azure virtual machine. This link has some proposed open-source models: https://feras.blog/using-form-recognizer-service-to-extract-data-from-receipts

Azure Solution Architecture Diagram

Final words,

- Designing the solution is nothing but a tradeoff challenge between many factors.

- Designing the solution is a continuous activity! Changing requirements are inevitable, and so is enhancing the design.

- After launching the software, we should monitor and optimize.

- The following blog post explains how to create, train, and publish the Custom Vision model.

- The following blog post explains how to create and call the Form Recognizer service.