In this post, I will use three Azure services to scale an online auctions solution hosted on Azure.

Auctions Online!

Suppose we have an online auctions solution that must support hundreds of users bidding in the same auction at the same time.

The following are the basic requirements our solution has to support:

- Each user provides the bid amount (money) and sends the request to the server.

- The server receives hundreds of requests simultaneously, runs some business logic, and stores the bids in the database.

And this is our plan:

- Build an ASP.NET Core web app that does all the work:

  - expose an endpoint to receive the bids

  - execute the business logic

  - insert the bid into the database

- Run a load test with 1,000 concurrent users for two minutes against the web app

- Improve the architecture to offload the intensive work from the web app to a background worker

- Repeat the load test against the new architecture and compare the results with the first test

- Learn from the test results and improve the architecture

Let’s do it!

1- Build an ASP.NET Core web app that does it all

This monolithic architecture represents our solution: the internet-facing web application does all the work. The application uses the following technologies:

- .NET Core 2.2

- ASP.NET Core

- Entity Framework Core

- SQL Database

Here is a link to the code on GitHub. The project name is Auctions. The bid model looks like this:

```csharp
using System;
using System.ComponentModel.DataAnnotations;

public class Outbid
{
    public Guid Id { get; set; }

    [Required]
    public Guid BidId { get; set; }

    [Required]
    public float Amount { get; set; }
}
```

And here is the endpoint action that does all the work: the basic business logic and the database operations.

[HttpPost]

public async Task Post([FromBody] Outbid outbid)

{

try

{

if (ModelState.IsValid)

{

// Check if amount is not already existed

if (await _auctionsDbContext.Outbids.AnyAsync(o => o.BidId == outbid.BidId && o.Amount >= outbid.Amount))

{

//return StatusCode(400, $"someone already did outbid on this amount:{outbid.Amount} or more!");

}

outbid.Id = Guid.NewGuid();

await _auctionsDbContext.Outbids.AddAsync(outbid);

await _auctionsDbContext.SaveChangesAsync();

return Ok();

}

else

return StatusCode(400, ModelState);

}

catch (Exception exc)

{

throw;

}

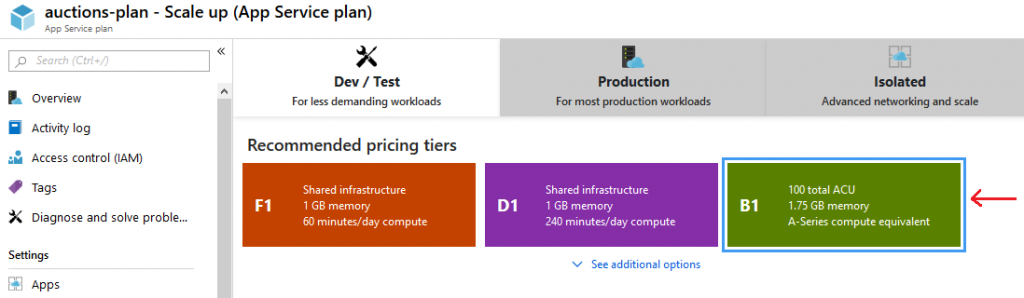

} After that, I deployed the code to Azure Web App with a

I also created an Azure SQL Database on the Basic tier (5 DTUs).

Note that I deliberately didn't use a high pricing tier in this experiment, so that the test reveals the ceiling of the architecture itself.

Now that we have built and deployed the basic solution that supports bidding, let's test its performance and validate whether this architecture meets the requirements.

2- Load test for 1000 users for two minutes

You can’t really make the right decision if you can’t measure what your application can do. That’s why performance testing is critically important for your application.

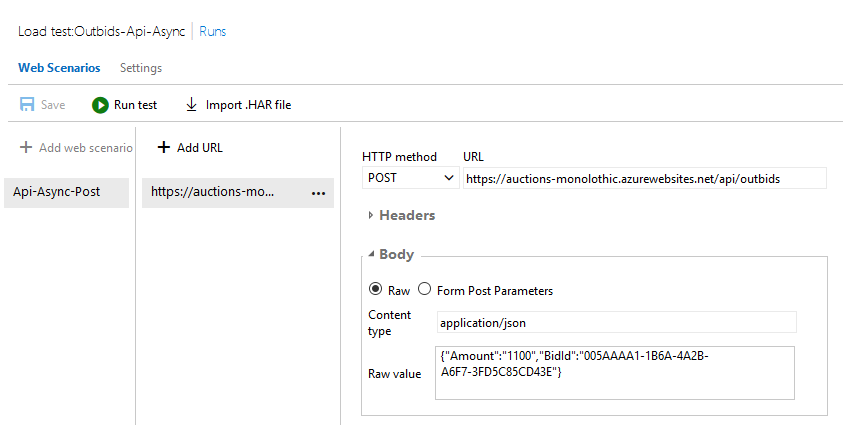

For this load test, I used the Azure DevOps URL-based load test. Note that this tool is deprecated and is going to be retired. You can’t invest

Check this post for more details about the deprecation and to find good alternatives.

In the Azure DevOps URL-based test, I created a test that calls the outbids API with the following settings:

- 1000 concurrent users

- two minutes

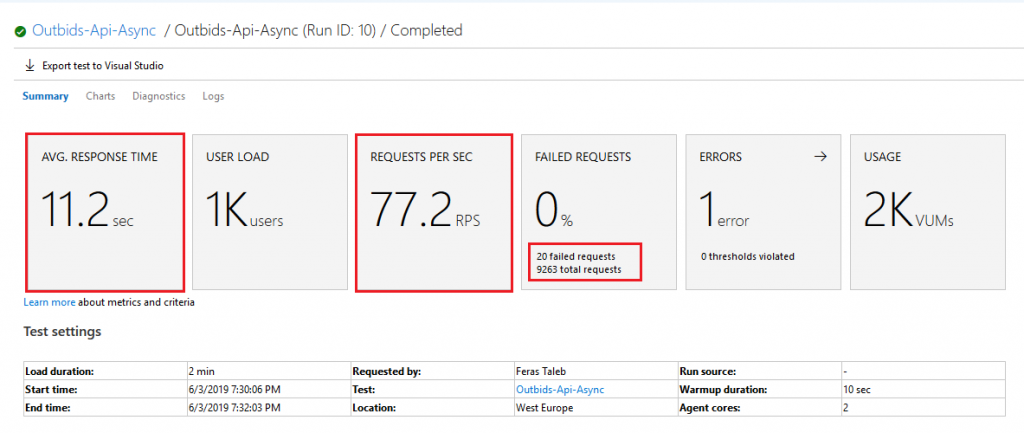

After running the load test, I got the following results. Take a look 🙂

- The average response time is 11.2 seconds, which is pretty high.

- The throughput is 77.2 requests per second (RPS), which is low for 1,000 users interacting at the same time.

- Only 20 requests failed, which is a small number compared to the total.

- The total number of requests is 9,263, coming from 1,000 concurrent virtual users.

3- Improve the architecture

Before scaling up the server, we should solve the challenge by optimizing the application's architecture. The front-end is doing all the work.

Let’s split the work between different components: the front-end only receives bid requests and sends them to another component.

The second component stores the bids in a queue and delivers them to a third component that runs the business logic.

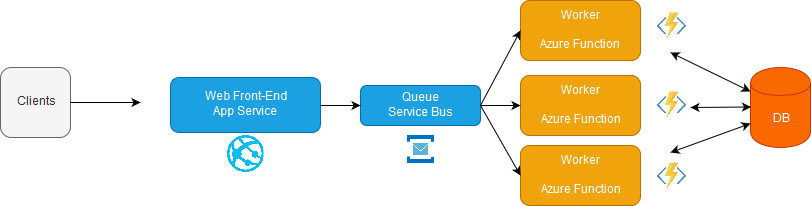

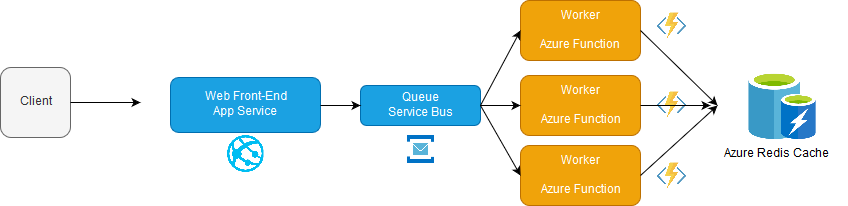

The following diagram represents the new architecture:

- A web front-end that receives the bids

- A Service Bus queue that works as a buffer, storing the bid info as queue messages that wait to be processed in first-in, first-out (FIFO) order

- An Azure Function that is triggered by the Service Bus queue, receives the message, and runs the business logic
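To see why this helps, here is a minimal in-memory sketch of the web-queue-worker flow in C# 9 (top-level statements, no NuGet packages). It is an illustration, not the Service Bus SDK: a `System.Threading.Channels` channel stands in for the queue, and `AcceptBid` and `RunWorkerAsync` are hypothetical names for the front-end and the worker. Accepting a bid is only an enqueue, so the front-end returns immediately while the slow part runs in the background consumer.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Channels;
using System.Threading.Tasks;

// In-memory stand-in for the Service Bus queue (hypothetical, for illustration only).
var queue = Channel.CreateUnbounded<(Guid BidId, float Amount)>();

// Bids handled by the worker; only the worker writes to this list.
var stored = new List<(Guid BidId, float Amount)>();

// "Web front-end": enqueue the bid and return immediately, no business logic here.
bool AcceptBid(Guid bidId, float amount) => queue.Writer.TryWrite((bidId, amount));

// "Worker": drain the queue and do the slow part in the background.
async Task RunWorkerAsync()
{
    await foreach (var bid in queue.Reader.ReadAllAsync())
    {
        // Placeholder for the real business logic and database insert.
        stored.Add(bid);
    }
}

Task worker = RunWorkerAsync();

// Simulate 1,000 bids arriving for one auction.
var auctionId = Guid.NewGuid();
int accepted = 0;
for (int i = 1; i <= 1000; i++)
{
    if (AcceptBid(auctionId, i * 10f)) accepted++;
}

// No more bids: let the worker finish whatever is still queued.
queue.Writer.Complete();
await worker;

Console.WriteLine($"accepted={accepted}, stored={stored.Count}");
```

Running it prints `accepted=1000, stored=1000`. With a single reader, the channel hands the bids over in the order they were written, similar to the FIFO behavior described for the queue.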

The code for the new architecture is in the same GitHub repository. The web front-end endpoint becomes more concise: its only job is to send the bid info to the Service Bus queue:

```csharp
// POST api/values
[HttpPost]
public async Task<IActionResult> Post([FromBody] Outbid outBidModel)
{
    if (!ModelState.IsValid)
        return StatusCode(400, ModelState);

    // Serialize the bid to JSON and wrap it in a Service Bus message
    var message = new Message(Encoding.UTF8.GetBytes(JsonConvert.SerializeObject(outBidModel)))
    {
        MessageId = Guid.NewGuid().ToString()
    };

    // Hand the bid over to the queue; the Azure Function does the rest
    await _messagingBrokerSender.SendAsync(message);
    return Ok();
}
```

The Azure Functions app receives the queue message, runs the business logic, and inserts the bid into the database:

```csharp
[FunctionName("OutbidsWorker")]
public static async Task Run([ServiceBusTrigger("outbids", Connection = "Outbids_Queue")] Message myQueueItem, ILogger log)
{
    var body = Encoding.UTF8.GetString(myQueueItem.Body);
    log.LogInformation($"C# ServiceBus queue trigger function processed message: {body}");

    // Deserialize the message body back into the Outbid model
    var outbid = JsonConvert.DeserializeObject<Outbid>(body);

    var cn = Environment.GetEnvironmentVariable("Outbids_SQLDbConnection");
    using (var conn = new SqlConnection(cn))
    {
        await conn.OpenAsync();

        // Round trip 1: check whether someone already bid this amount or more (parameterized)
        var sqlText = "SELECT TOP 1 Id FROM outbids WHERE Amount >= @Amount AND BidId = @BidId";
        var isExists = false;
        using (var cmd = new SqlCommand(sqlText, conn))
        {
            cmd.Parameters.Add(new SqlParameter("@Amount", outbid.Amount));
            cmd.Parameters.Add(new SqlParameter("@BidId", outbid.BidId));
            isExists = await cmd.ExecuteScalarAsync() != null;
            log.LogInformation($"isExists value : {isExists}- For BidId:{outbid.BidId}");
        }

        // As in the web app version, the rejection is commented out, so every bid is inserted
        //if (isExists)
        //    return;

        // Round trip 2: insert the bid, reusing the generated Id
        outbid.Id = Guid.NewGuid();
        var insertText = "INSERT INTO OUTBIDS(Id,BidId,Amount) VALUES(@Id,@BidId,@Amount);";
        using (var cmdInsert = new SqlCommand(insertText, conn))
        {
            cmdInsert.Parameters.Add(new SqlParameter("@Id", outbid.Id));
            cmdInsert.Parameters.Add(new SqlParameter("@BidId", outbid.BidId));
            cmdInsert.Parameters.Add(new SqlParameter("@Amount", outbid.Amount));
            var affectedRows = await cmdInsert.ExecuteNonQueryAsync();
            log.LogInformation($"affectedRows value : {affectedRows}- For BidId:{outbid.BidId}");
        }
    }
}
```

After deploying the new solution to

4- Repeat the load test

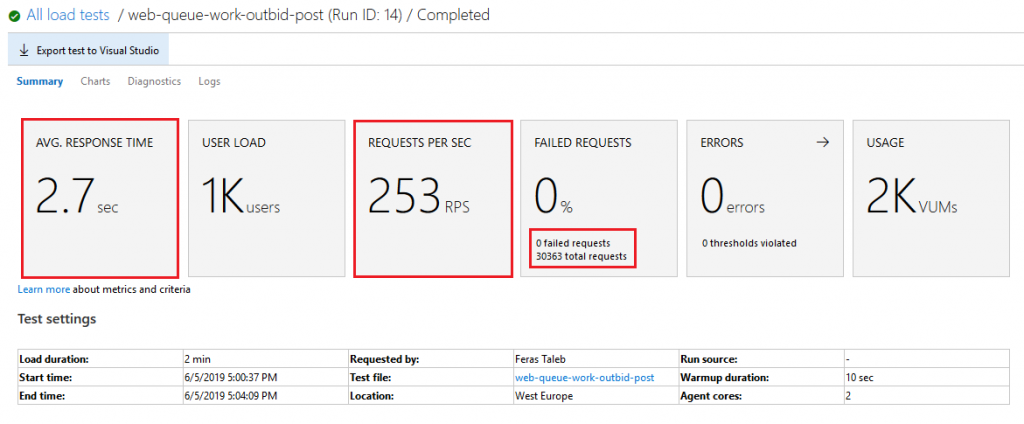

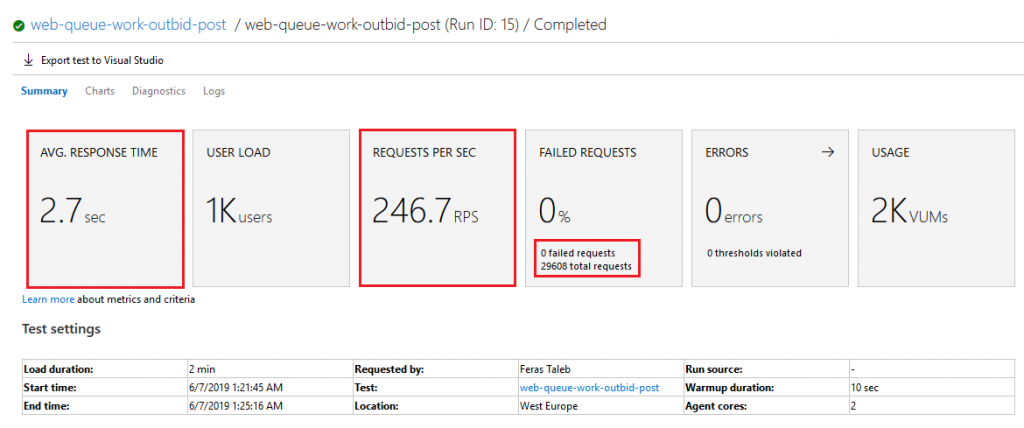

Here are the load test results for the web-queue-worker architecture:

- The average response time is 2.7 seconds, which is a good improvement.

- The throughput is 253 requests per second (RPS).

- No requests failed.

- The total number of requests is 30,363, coming from 1,000 concurrent virtual users.

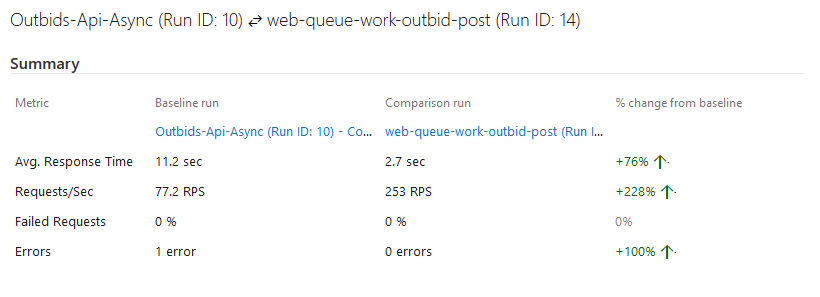

Let’s review the two load test results together.
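The reported throughput is simply the total number of requests divided by the 120-second test duration. This small C# 9 program recomputes the figures from the two result lists above and derives the improvement ratios; it uses only numbers reported in this post.

```csharp
using System;

// Figures reported by the two load tests (1,000 virtual users, two minutes each).
const double DurationSeconds = 2 * 60;

int totalBefore = 9263, failedBefore = 20;   // monolithic web app
int totalAfter = 30363, failedAfter = 0;     // web-queue-worker
double avgBefore = 11.2, avgAfter = 2.7;     // average response time, seconds

// Throughput = total requests / test duration.
double rpsBefore = totalBefore / DurationSeconds;
double rpsAfter = totalAfter / DurationSeconds;

// Failed requests as a percentage of all requests.
double failPctBefore = 100.0 * failedBefore / totalBefore;
double failPctAfter = 100.0 * failedAfter / totalAfter;

Console.WriteLine($"Throughput:   {rpsBefore:F1} -> {rpsAfter:F1} req/s ({rpsAfter / rpsBefore:F2}x)");
Console.WriteLine($"Avg response: {avgBefore:F1} -> {avgAfter:F1} s ({avgBefore / avgAfter:F2}x faster)");
Console.WriteLine($"Failed:       {failPctBefore:F2}% -> {failPctAfter:F2}%");
```

It works out to roughly 3.3× the throughput and a roughly 4.1× shorter average response time, with the failure rate dropping from about 0.22% to zero.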

Cool, mission accomplished! Our system can now handle 1,000 concurrent users with an average response time of 2.7 seconds.

2.7 seconds should be optimized further as the system grows. For now, it’s acceptable, since we know our online solution's customer base is 1,000 users.

We no longer have a busy front-end.

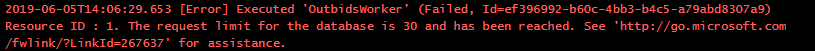

But wait! I noticed that the Azure Function app failed to connect to the SQL database for almost half of the requests!

I also queried the database table and found only 17,318 rows for 30,363 successful bids from the users.

So the new front-end, together with the Service Bus queue and the Azure Function, meets the requirement of handling 1,000 users, but now we have a busy database because of the extraneous fetching we do on it: we query the top bid amount on every request, which is a case of Chatty I/O.
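A quick back-of-the-envelope calculation puts numbers on this. It assumes, as in the function above, two SQL commands per bid (the SELECT for the top amount and the INSERT), and that the worker has to keep pace with the front-end over the same two minutes; opening the connection is not counted.

```csharp
using System;

// Figures from the second load test (web-queue-worker with SQL Database).
const double DurationSeconds = 120;
int acceptedBids = 30363;   // requests the front-end accepted and queued
int storedRows = 17318;     // rows found in the outbids table afterwards

// Assumption: the worker runs two SQL commands per bid (SELECT top amount, then INSERT).
const int CommandsPerBid = 2;

int lostBids = acceptedBids - storedRows;
double lostPercent = 100.0 * lostBids / acceptedBids;

// Load the database would need to absorb to keep pace with the front-end.
double commandsPerSecond = (double)acceptedBids * CommandsPerBid / DurationSeconds;

Console.WriteLine($"Lost bids: {lostBids} ({lostPercent:F1}% of accepted bids)");
Console.WriteLine($"SQL commands needed: about {commandsPerSecond:F0} per second");
```

That is about 13,000 missing bids (roughly 43%), and around 500 commands per second aimed at a Basic 5-DTU database.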

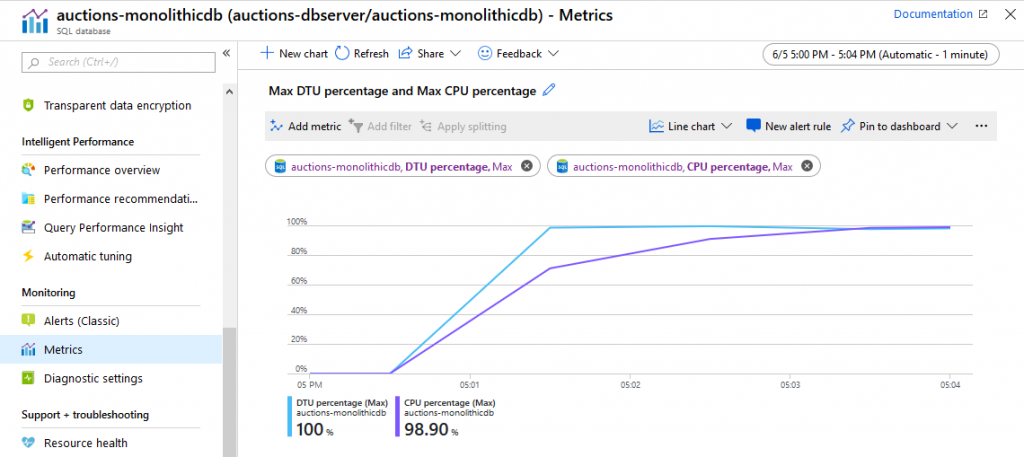

Please take a look at the DTU and CPU usage of the SQL database.

Our architecture still needs improvement on the database side 🙁

We have two main choices:

- Scale up the database. But what should we do when we get thousands or hundreds of thousands of users in the future? Scale up the database again?

- Optimize the architecture design and minimize the I/O requests.

Let’s optimize the architecture by using another type of database that supports higher throughput with low latency.

That’s right! What you said is totally right. It is the In

Let’s use Azure Redis Cache instead of the SQL database while the auction is running. When the

This is a link about how to create Redis in Azure.

So here is the new architecture design after adding Azure Redis Cache:

I installed the StackExchange.Redis NuGet package and rewrote the Azure Function to connect to Redis.

```csharp
[FunctionName("OutbidsWorker")]
public static async Task Run([ServiceBusTrigger("outbids", Connection = "Outbids_Queue")] Message myQueueItem, ILogger log)
{
    var body = Encoding.UTF8.GetString(myQueueItem.Body);
    log.LogInformation($"C# ServiceBus queue trigger function processed message: {body}");

    var outbid = JsonConvert.DeserializeObject<Outbid>(body);

    // One list of bids and one "top amount" key per BidId
    var bidsListKey = $"bids{outbid.BidId}";
    var topOutbidKey = $"TopOutbid{outbid.BidId}";

    // lazyConnection: a static Lazy<ConnectionMultiplexer> defined elsewhere in the class (not shown)
    IDatabase cache = lazyConnection.Value.GetDatabase();

    var topOutbid = await cache.StringGetAsync(topOutbidKey);
    if (topOutbid.HasValue)
    {
        var topAmount = Convert.ToSingle(topOutbid.ToString());
        if (topAmount >= outbid.Amount)
        {
            // ignore this outbid
            //return;
        }
    }

    // Record this amount as the top bid (this also creates the key on the first bid)
    await cache.StringSetAsync(topOutbidKey, outbid.Amount);

    outbid.Id = Guid.NewGuid();
    var jsonOutbid = JsonConvert.SerializeObject(outbid);
    await cache.ListLeftPushAsync(bidsListKey, jsonOutbid);
}
```

I redeployed the new Azure Function app, which gets the queue message, runs the business logic, and stores the bid in the Redis cache.
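One thing to keep in mind about this function: reading the top amount with `StringGetAsync` and writing it with `StringSetAsync` are two separate round trips. As written (with the `return` commented out), every bid overwrites the top amount, even a lower one that arrives later; and even with the `return` re-enabled, Azure Functions can process several queue messages in parallel, so two executions could read the same top amount and both overwrite it. The check and the update need to be one atomic step. The sketch below shows the idea in memory, with a `ConcurrentDictionary` compare-and-swap standing in for Redis; `TryPlaceBid` is a hypothetical helper, not part of the original code. In Redis itself, one common way to get the same guarantee is to run the compare-and-set server-side as a Lua script (for example via StackExchange.Redis `ScriptEvaluateAsync`), since Redis executes a script atomically; that port is an assumption and is not shown here.

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// In-memory stand-in for the Redis "TopOutbid{BidId}" keys: top amount per BidId.
var topBids = new ConcurrentDictionary<Guid, float>();

// Hypothetical helper: records `amount` as the new top bid only if it is strictly higher
// than the current one. The check and the write form one atomic compare-and-swap.
bool TryPlaceBid(Guid bidId, float amount)
{
    while (true)
    {
        if (!topBids.TryGetValue(bidId, out var current))
        {
            // First bid for this auction: only one concurrent caller can win TryAdd.
            if (topBids.TryAdd(bidId, amount)) return true;
            continue; // someone else added first, so re-read and compare again
        }

        // Someone already bid this amount or more.
        if (current >= amount) return false;

        // Succeeds only if the stored value is still the one we compared against.
        if (topBids.TryUpdate(bidId, amount, current)) return true;
        // Another bid won the race; loop and re-check against the new top amount.
    }
}

// Fire 10,000 bids for one auction concurrently, in no particular order.
var auction = Guid.NewGuid();
int accepted = 0;
Parallel.ForEach(Enumerable.Range(1, 10_000), i =>
{
    if (TryPlaceBid(auction, i)) Interlocked.Increment(ref accepted);
});

Console.WriteLine($"top={topBids[auction]}, accepted={accepted}");
```

However the threads interleave, the highest bid always ends up as the top amount; how many bids were accepted along the way varies from run to run.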

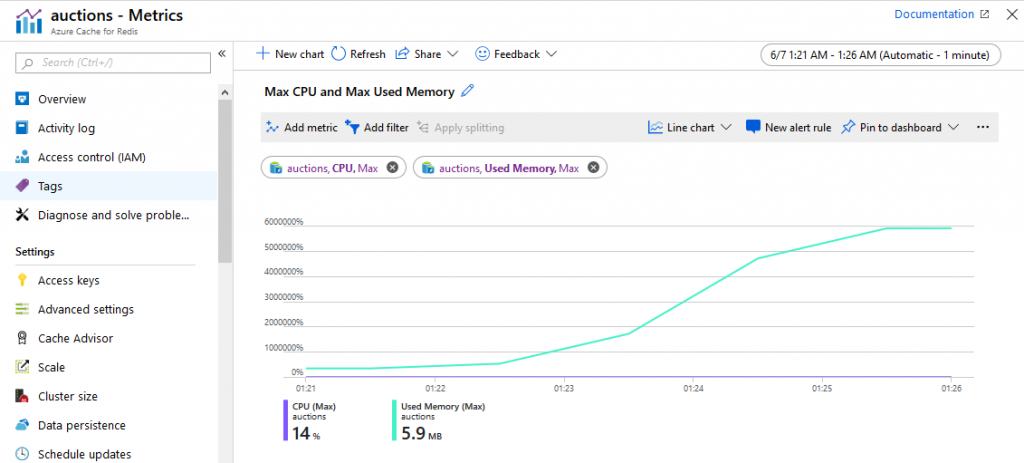

Then I ran the load test again, and here are the results:

The results are almost the same as in the previous run, because nothing changed on the front-end side. However, the number of handled bids is 29,608, which means no bids were dropped.

It is worth mentioning that we used the Basic C0 (250 MB) pricing tier for Redis. When I prepared this post, it cost $0.022/hour.

This is the CPU and memory usage of the Redis instance I used. There is still plenty of capacity left 😎

Now we can say mission accomplished 🙂

Recap

- The main goal was to keep the front-end as responsive as possible while serving 1,000 users.

- We achieved this goal by reducing the average response time from 11.2 seconds to 2.7 seconds.

- We noticed that the SQL database reached its maximum request limit and started to drop bids.

- We used three Azure services to improve the solution's performance:

  - Service Bus Queue

  - Azure Function

  - Azure Redis Cache

Final Thoughts!

- Performance testing is an essential part of validating your architecture design.

- After launching, you have to monitor and measure your system so you can expand and improve your architecture as you grow.

- Run more load tests to find the ceiling of the architecture, that is, the maximum number of concurrent users the solution can handle.

- More components and more technologies need a more experienced team to handle them.

- Pricing is an important factor in architecture change decisions.

- Before you scale up, make the solution architecture scalable.

- Measuring and testing are the key.

That’s all! I hope you have enjoyed it.